This new technology makes it possible to control an automatic machine or a robot remotely through visual analysis of the operator's postures. The system has been tested in two different use-cases:

- in the first, the system recognizes a set of user postures, each of which corresponds to a pre-programmed movement;
- in the second, the system is commanded, again through pose recognition, to perform an elementary movement, which is recorded as a via-point for building complex configurations.
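The two use-cases can be sketched as a small dispatcher that turns recognized pose labels into robot commands. This is a minimal illustration under stated assumptions, not the laboratory's software: the pose labels, the joint values, the jog step, and the names `PoseController`, `PROGRAMMED_MOVES`, `JOG_STEPS` and `interpolate` are all hypothetical, and a real 7-axis arm would also need joint limits and a proper motion planner. Straight-line interpolation in joint space is used only as the simplest way to connect recorded via-points.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

JointConfig = Tuple[float, ...]  # one angle in radians per robot joint (7 for a 7-DOF arm)

# Hypothetical use-case 1 table: pose label -> pre-programmed target configuration.
PROGRAMMED_MOVES: Dict[str, JointConfig] = {
    "both_arms_up": (0.0, 0.5, 0.0, -1.0, 0.0, 0.5, 0.0),
    "left_arm_out": (0.8, 0.3, 0.0, -1.2, 0.0, 0.4, 0.0),
}

# Hypothetical use-case 2 table: pose label -> (joint index, step in radians).
JOG_STEPS: Dict[str, Tuple[int, float]] = {
    "right_arm_out": (0, 0.1),
    "right_arm_up": (1, 0.1),
}


@dataclass
class PoseController:
    current: JointConfig
    via_points: List[JointConfig] = field(default_factory=list)

    def on_pose(self, label: str) -> JointConfig:
        """Update and return the commanded configuration for one recognized pose."""
        if label in PROGRAMMED_MOVES:      # use-case 1: jump to a stored movement
            self.current = PROGRAMMED_MOVES[label]
        elif label in JOG_STEPS:           # use-case 2: elementary movement of one joint
            idx, step = JOG_STEPS[label]
            q = list(self.current)
            q[idx] += step
            self.current = tuple(q)
        elif label == "record":            # use-case 2: store the current pose as a via-point
            self.via_points.append(self.current)
        # Any other label is ignored, so the robot keeps its current configuration.
        return self.current


def interpolate(via_points: List[JointConfig], steps: int) -> List[JointConfig]:
    """Linearly interpolate in joint space, `steps` samples per segment."""
    path: List[JointConfig] = []
    for a, b in zip(via_points, via_points[1:]):
        for k in range(steps):
            t = k / steps
            path.append(tuple(x + t * (y - x) for x, y in zip(a, b)))
    if via_points:
        path.append(via_points[-1])  # end exactly on the last via-point
    return path
```

For example, recording, jogging joint 0 twice with `"right_arm_out"`, and recording again yields two via-points, which `interpolate` connects with a straight segment in joint space.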

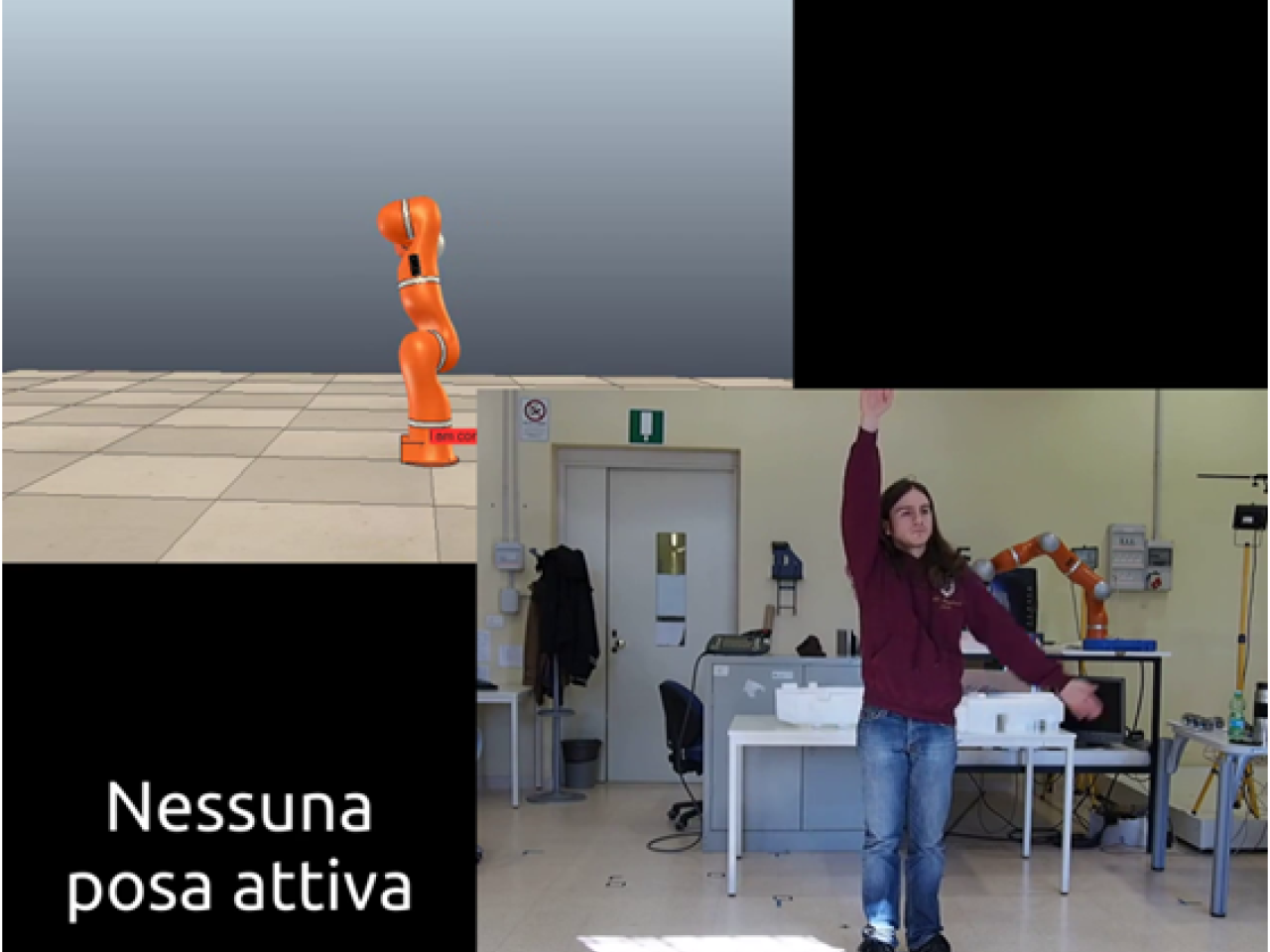

Simulator of the robot control system

The system enables remote control of a machine or robot through gestures performed with the arms. This is an innovative mode of interaction between machine and human operator, because the operator commands the machine without any physical contact, unlike common interfaces such as operator panels, which can only be used by touching them directly.

The system can be used to operate automatic machines and/or robots remotely. For example, an operator can start or stop a machine simply with a wave of the hand. A second application is teaching a robot its working trajectories by having it mimic the operator, a concept similar to the traditional teach pendant but without constraining the operator's position.
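One way to make the wave-to-start/stop example robust against spurious detections is to require the trigger pose to be held for several consecutive frames and to fire only once per gesture. The sketch below assumes an upstream recognizer that emits one pose label per camera frame; the class name `StartStopToggle`, the label `"wave"`, and the `hold_frames` threshold are hypothetical and not taken from the described system.

```python
from dataclasses import dataclass


@dataclass
class StartStopToggle:
    """Toggle a machine between running and stopped when a trigger pose is held.

    hold_frames is a hypothetical tuning parameter: the trigger pose must be
    seen in this many consecutive frames before the command fires, which
    filters out isolated misdetections.
    """

    trigger: str = "wave"
    hold_frames: int = 15
    running: bool = False
    _count: int = 0
    _armed: bool = True  # re-armed only after the trigger pose is released

    def update(self, label: str) -> bool:
        """Feed one per-frame pose label; return whether the machine should run."""
        if label == self.trigger:
            self._count += 1
            if self._armed and self._count >= self.hold_frames:
                self.running = not self.running
                self._armed = False  # at most one toggle per continuous gesture
        else:
            self._count = 0
            self._armed = True
        return self.running
```

At a frame rate of 30 frames per second, `hold_frames=15` would correspond to holding the gesture for about half a second; the appropriate value depends on the sensor and the application.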

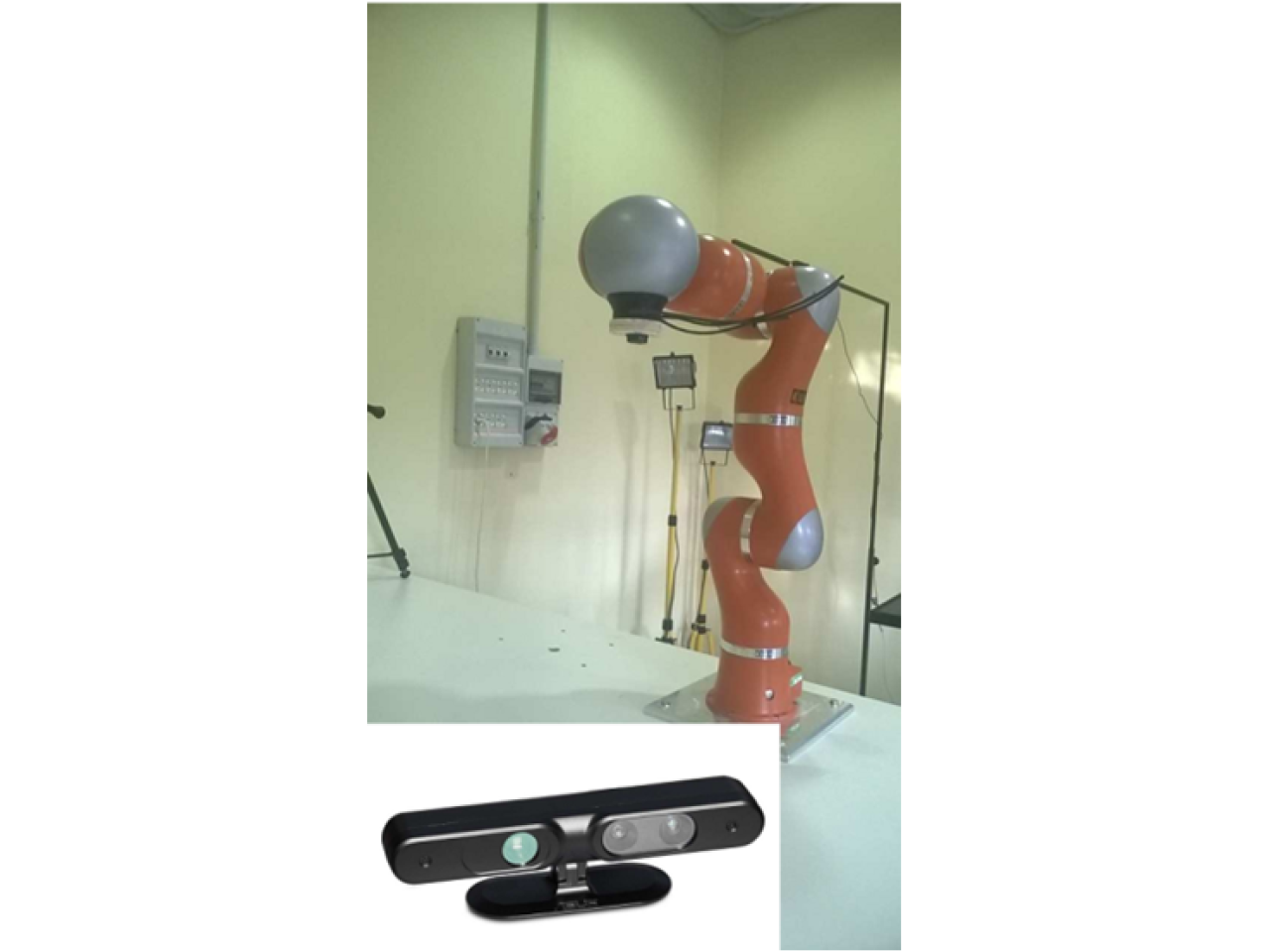

Remote control of a robot system

Remote control of industrial robots

The laboratory has developed an HMI (Human-Machine Interface) application based on a Natural Interface, specifically on the recognition of particular static poses called "Static Gestures". The interface was developed in ROS (Robot Operating System), the open-source standard for programming and controlling robotic systems. The Static Gestures are captured by a motion-sensing device, the Asus Xtion Pro Live, acquired through a dedicated software package, and recognized by a program built on a purpose-designed recognition algorithm. The natural interface, which is entirely invisible to the operator, allows the application to control a 7-degree-of-freedom KUKA LWR 4+ robotic arm. A software interface generates the trajectories that control the robot's position, based on the simple gestural poses identified from the operator.

The system also includes a V-REP simulator for offline validation of the application in a virtual environment. In testing, the system operated for 10 consecutive hours without a single pose-recognition error, and the operator who conducted the tests reported comfortable, relaxed working conditions.
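The description does not detail the recognition algorithm itself. As a hedged illustration of what static-gesture recognition from a depth sensor can involve, the sketch below classifies a single skeleton frame with simple geometric rules on 3D joint positions, of the kind a skeleton tracker produces from depth images. The joint names, the coordinate convention (metres, y pointing up), the thresholds, and the function `classify_static_pose` are assumptions; this rule-based approach is a stand-in, not the laboratory's algorithm.

```python
from typing import Dict, Optional, Tuple

# (x, y, z) in metres; y is assumed to point up and x to the operator's side.
Point = Tuple[float, float, float]


def classify_static_pose(
    joints: Dict[str, Point],
    margin: float = 0.10,  # hypothetical height tolerance in metres
    reach: float = 0.40,   # hypothetical minimum sideways hand offset in metres
) -> Optional[str]:
    """Map one skeleton frame to a static-pose label using geometric rules.

    Joint names, rules, and thresholds are illustrative assumptions,
    not the laboratory's recognition algorithm.
    """

    def raised(hand: str, shoulder: str) -> bool:
        # Hand clearly above the shoulder.
        return joints[hand][1] > joints[shoulder][1] + margin

    def sideways(hand: str, shoulder: str) -> bool:
        # Hand roughly at shoulder height and well out to the side.
        dx = abs(joints[hand][0] - joints[shoulder][0])
        dy = abs(joints[hand][1] - joints[shoulder][1])
        return dx > reach and dy < margin

    left_up = raised("left_hand", "left_shoulder")
    right_up = raised("right_hand", "right_shoulder")
    if left_up and right_up:
        return "both_arms_up"
    if right_up:
        return "right_arm_up"
    if left_up:
        return "left_arm_up"
    if sideways("right_hand", "right_shoulder"):
        return "right_arm_out"
    if sideways("left_hand", "left_shoulder"):
        return "left_arm_out"
    return None  # no known pose: the caller should leave the robot still
```

Returning `None` when no rule matches lets the caller keep the robot stationary instead of guessing, a conservative default for an interface that drives a physical manipulator.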

Corghi S.p.A., Elettric 80 S.p.A., Clevertech S.r.l., Bema Automazioni S.r.l., OCME S.p.A.

The laboratory is looking for companies that want to deploy this application in their own production and application scenarios. The ideal partner is a strongly innovative company that builds robots or automatic machines involving intensive interaction with the human operator.

Camera and robotic manipulator.